Agentic AI: Using Tool Calling to Go Beyond RAG

This is Part 2 of a three-part series:

- Part 2: Using Tool Calling to Go Beyond RAG

- Part 3: Agentic AI in Production (Coming soon!)

“Agentic AI” is one of today’s most popular AI terms. But what does it actually mean?

At its core, Agentic AI describes systems that can make decisions and perform tasks on their own. They don’t just respond—they take action. That autonomy comes from two capabilities:

- Decision-making: choosing what to do next

- Taking action: performing the task

Humans can easily act on their decisions. Large language models (LLMs) don’t have that same capability. An LLM can reason about what should happen, but it can’t take action unless we give it a way to interact with the world.

That bridge is tool calling—the mechanism that turns a passive LLM into an active, task-performing agent.

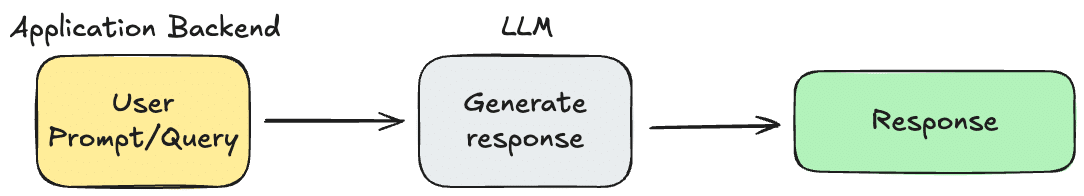

Standard LLM Workflow

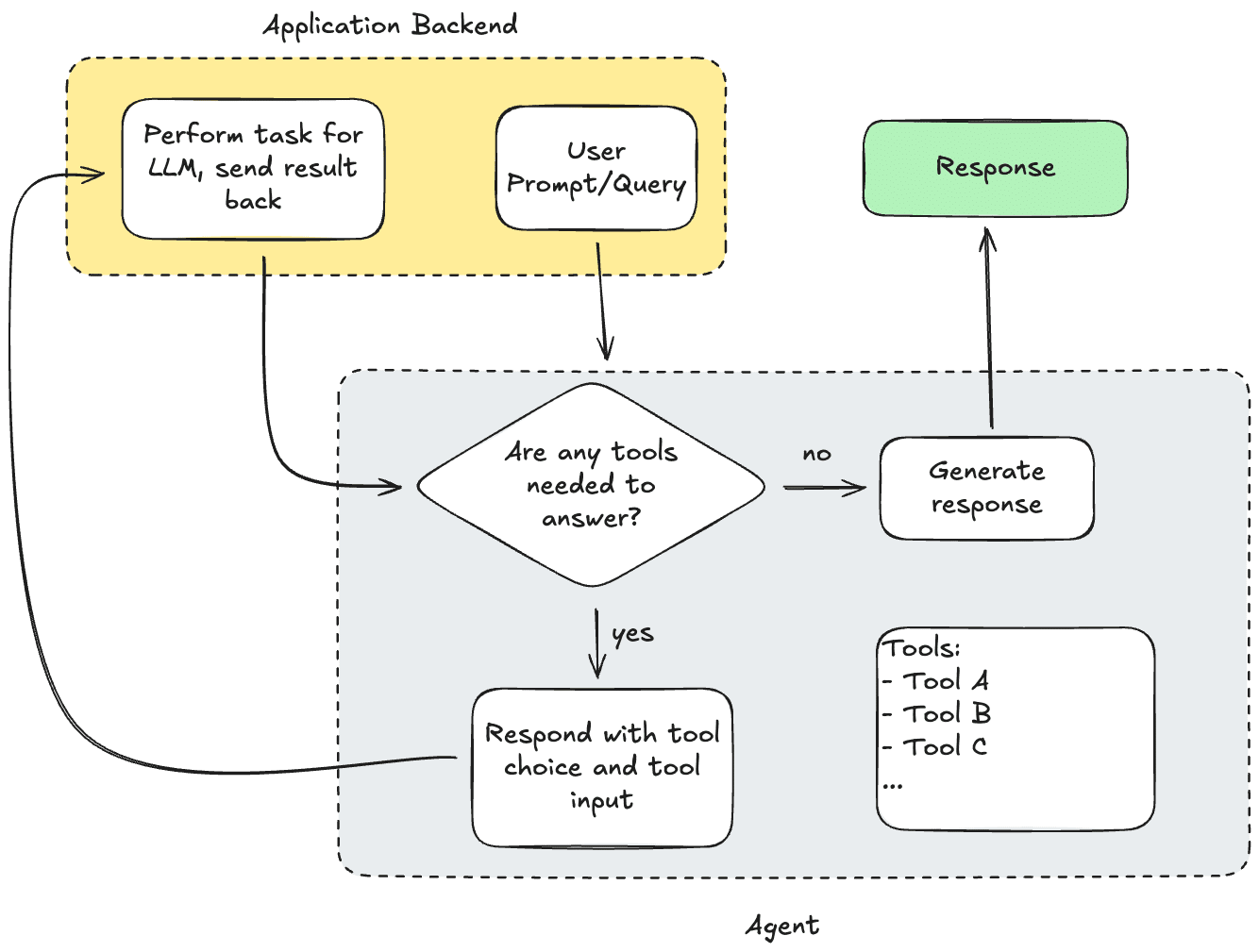

Agentic LLM Workflow With Tool Calling

What Is Tool Calling?

If Agentic AI is about helping LLMs act, tool calling is how they do it. Tool calling allows an LLM to use external functions or APIs (tools) to perform real-world tasks based on its reasoning. Instead of returning only text, the LLM can decide which tool to use, call it with specific inputs, and then continue reasoning using the results.

How tool calling works

- Give the LLM a list of tools it has access to, along with the required inputs for each tool.

- Let the LLM decide which tool to use.

- When the LLM responds, it “calls” one of these tools, which your application then executes.

- The result from the tool call is sent back to the LLM so it can generate a final response.

Putting it into practice

Consider the question: “What’s the weather today?”

An LLM doesn’t know the current weather. But if you give it a “getWeather” tool:

- The LLM responds with a call to "getWeather."

- We return the result from our "getWeather" tool.

- The LLM synthesizes a natural language response for the user.
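
The round trip described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any particular provider's API: `fake_llm` is a hypothetical stand-in for a real model call, the message and tool-call dictionaries use an assumed shape, and `get_weather` returns canned data for a hard-coded city.

```python
import json

# Tool specification sent to the model: name, description, required inputs.
# The JSON-Schema-style layout here is an assumed shape; providers differ.
TOOLS = [{
    "name": "getWeather",
    "description": "Return the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]


def get_weather(city: str) -> dict:
    """Hypothetical tool body; a real one would query a weather service."""
    return {"city": city, "temp_c": 18, "conditions": "cloudy"}


TOOL_IMPLS = {"getWeather": get_weather}


def fake_llm(messages: list, tools: list) -> dict:
    """Stand-in for a real model call (an assumption, not a real API).

    If the latest message is a tool result, write the final answer from it;
    otherwise request the getWeather tool for a hard-coded city.
    """
    last = messages[-1]
    if last["role"] == "tool":
        data = json.loads(last["content"])
        text = f"It is {data['temp_c']} C and {data['conditions']} in {data['city']}."
        return {"role": "assistant", "content": text}
    call = {"name": "getWeather", "arguments": {"city": "Chicago"}}
    return {"role": "assistant", "tool_call": call}


def answer(question: str) -> str:
    messages = [{"role": "user", "content": question}]
    reply = fake_llm(messages, TOOLS)                 # model chooses a tool
    call = reply.get("tool_call")
    if call:
        result = TOOL_IMPLS[call["name"]](**call["arguments"])  # app runs it
        messages.append(reply)
        messages.append({"role": "tool", "content": json.dumps(result)})
        reply = fake_llm(messages, TOOLS)             # model writes the answer
    return reply["content"]
```

Note that the model never executes anything itself: it only names a tool and its inputs, and the application decides whether and how to run it.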

Taking tool calling a step further

Agents build on this by using recursive tool calling to achieve their results:

- The agent receives a list of tools.

- The agent decides which tool to use.

- We return the tool’s result.

- The agent then decides to either (a) continue using another tool, or (b) stop and provide a final response.
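
A rough sketch of that loop follows. It is written as a plain `for` loop rather than literal recursion, which keeps the step limit easy to enforce. Everything here is illustrative: `scripted_llm` is a hypothetical stand-in that replays a fixed plan instead of calling a real model, and the `add` and `multiply` tools are invented for the example.

```python
def add(a, b):
    return a + b


def multiply(a, b):
    return a * b


TOOLS = {"add": add, "multiply": multiply}


def scripted_llm(messages: list) -> dict:
    """Hypothetical model that computes (2 + 3) * 4 one tool call at a time."""
    results = [m["content"] for m in messages if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool_call": {"name": "add", "arguments": {"a": 2, "b": 3}}}
    if len(results) == 1:
        args = {"a": results[0], "b": 4}
        return {"tool_call": {"name": "multiply", "arguments": args}}
    return {"content": f"The answer is {results[-1]}."}


def run_agent(llm, question: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = llm(messages)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]          # (b) stop and give a final response
        # (a) run the requested tool and feed the result back to the model
        result = TOOLS[call["name"]](**call["arguments"])
        messages.append({"role": "assistant", "tool_call": call})
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent exceeded max_steps without a final answer")
```

The `max_steps` guard is the simplest form of the hard limits discussed later in this article.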

Tips for Better Tool Calling

Tool calling isn’t just about giving an LLM access to functions. You can also shape the LLM’s behavior:

Add structured arguments

For example, adding a required "reasoning" argument to a tool makes the LLM explain why it wants to use that tool, even when the model is not a dedicated reasoning model.
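
One way this could look in practice is shown below. The tool name `searchDocs`, its schema layout, and the `execute` helper are all hypothetical; the point is only that the extra argument is captured for logging and stripped before the real function runs.

```python
SEARCH_TOOL = {
    "name": "searchDocs",
    "description": "Search internal documents.",
    "parameters": {
        "type": "object",
        "properties": {
            # Extra argument: the model must justify the call in plain text.
            "reasoning": {
                "type": "string",
                "description": "Why this tool is the right next step.",
            },
            "query": {"type": "string"},
        },
        "required": ["reasoning", "query"],
    },
}


def search_docs(query: str) -> list:
    """Hypothetical tool body returning a canned result."""
    return [f"result for {query!r}"]


def execute(call: dict, audit_log: list) -> list:
    """Run a searchDocs call, keeping the reasoning for logs only."""
    args = dict(call["arguments"])             # copy so the call is not mutated
    audit_log.append(args.pop("reasoning"))    # the tool never sees this field
    return search_docs(**args)
```

Because the explanation arrives as an ordinary argument, it can be logged, shown to a reviewer, or fed back into a later prompt.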

Use parallel calls

If the LLM can’t request parallel tool calls natively, create a 'wrapper tool' that accepts the inputs for several functions at once and runs them in parallel inside the tool itself.
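
A wrapper tool of this kind might be sketched as follows, using a thread pool from the standard library. The tool names and the `run_many` function are assumptions made for illustration, and the two tools return canned strings rather than calling real services.

```python
from concurrent.futures import ThreadPoolExecutor


def get_weather(city: str) -> str:
    return f"weather for {city}"


def get_time(city: str) -> str:
    return f"time in {city}"


TOOLS = {"getWeather": get_weather, "getTime": get_time}


def run_many(calls: list) -> list:
    """Wrapper tool: accept a batch of tool calls and run them concurrently."""
    def run_one(call: dict) -> dict:
        result = TOOLS[call["name"]](**call["arguments"])
        return {"name": call["name"], "result": result}

    with ThreadPoolExecutor(max_workers=8) as pool:
        # Executor.map yields results in the same order as the inputs.
        return list(pool.map(run_one, calls))
```

The model makes a single call to `run_many` with a list of requests, so it pays for one round trip instead of one per tool.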

These techniques can improve both clarity and speed.

Very Cool Tool Calling Example

(Say that 3 times fast!)

Automating dependency mapping

One of our clients needed to extract many different values from documents. Each value had its own instructions, often like this:

Value A can be found in Section X. If Value A is found, set Value B to ‘yes.’

This instruction means B depends on A.

Mapping a few dependencies by hand is simple. Mapping hundreds—written in natural language and full of cross-references—is not.

Instead of doing it manually, we used tool calling to:

- Feed each value’s description to the LLM

- Let the LLM generate dependencies as structured outputs

- Build a dependency graph

- Run a topological sort on that graph

- Return the sorted order to the LLM

- Let the LLM determine which values could be extracted in parallel

The result: a fully automated process that untangled human-written rules and produced a dependency graph we used to parallelize extractions.
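
The graph and sorting steps can be illustrated with Python's standard `graphlib` module. This is a simplified sketch, not the client implementation: the `llm_dependencies` dictionary is made-up data standing in for the LLM's structured outputs, and here the parallel batches are derived directly from the sorter rather than chosen by the LLM.

```python
from graphlib import TopologicalSorter

# Hypothetical structured outputs: each value mapped to the values it needs.
llm_dependencies = {
    "A": [],
    "B": ["A"],        # "If Value A is found, set Value B to 'yes.'"
    "C": [],
    "D": ["B", "C"],
}


def extraction_batches(deps: dict) -> list:
    """Group values into batches; everything in a batch can run in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()                 # raises graphlib.CycleError on circular rules
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())   # sorted only for stable output
        batches.append(ready)
        sorter.done(*ready)          # unlocks values that depended on this batch
    return batches
```

For the sample data, A and C have no prerequisites and land in the first batch, B follows once A is done, and D comes last.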

Tool Calling vs. Structured Output

These two ideas work together, but they solve different problems:

- Structured output creates a predictable format for the LLM’s response.

- Tool calling gives the LLM a way to gather information or take action before producing that response.

In practice, you often use tool calls to collect what you need for the agent—and structured output to produce the final answer in a format fit for use outside of the agent.
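
To make the distinction concrete, here is a small sketch of the structured-output half. It assumes the agent's final message is a JSON string with three agreed fields; the `WeatherReport` type and its field names are invented for this example.

```python
import json
from dataclasses import dataclass


@dataclass
class WeatherReport:
    city: str
    temp_c: float
    summary: str


def parse_final_answer(raw: str) -> WeatherReport:
    """Turn the model's final JSON text into a typed object.

    Raises KeyError or ValueError if the response does not match the
    expected shape, so bad output fails loudly instead of leaking downstream.
    """
    data = json.loads(raw)
    return WeatherReport(
        city=str(data["city"]),
        temp_c=float(data["temp_c"]),
        summary=str(data["summary"]),
    )
```

Tool calls happen before this point and can be as messy as the task requires; only the final, validated object leaves the agent.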

Using Agents vs. Hard-Coded Tool Calls

Tool calling can be thought of as an expanded form of RAG. Instead of retrieving only documents, you’re giving the LLM access to any external capability. There are two ways to design this:

Approach 1: Pre-defined tool calls

You decide exactly which tools to call before sending your prompt to the LLM. You execute these tools as needed, and enrich the prompt with context from the pre-determined calls.

Pros:

- Predictable behavior

- Fixed cost

- Fast execution

Cons:

- No flexibility

- The LLM can’t explore better inputs or alternative approaches

An example of this approach is basic RAG: you use a specific query to gather relevant documents, enrich your context with this data, and send it to the LLM.

Approach 2: Let the LLM decide

You give the LLM a list of tools and their specifications, and let it choose which to call.

Pros:

- Highly flexible

- Can adapt to unexpected situations

Cons:

- Harder to control

- More expensive (more LLM calls, more tokens)

- Slower

Agents shine in complex, open-ended workflows, but they must be managed carefully. An example of this approach is giving the LLM a ‘RAG tool’ and letting it decide when to use the tool and with what inputs.
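
The two approaches can be contrasted with the same retrieval function. In this sketch, `retrieve` is a toy keyword matcher standing in for a real vector search, and `DOCS`, `predefined_prompt`, and `agent_step` are hypothetical names chosen for the example.

```python
from typing import Optional

DOCS = [
    "Refunds are issued within 14 days of purchase.",
    "Support is available on weekdays from 9 to 5.",
]


def retrieve(query: str) -> list:
    """Toy retriever: return documents sharing any word with the query."""
    words = set(query.lower().split())
    return [d for d in DOCS if words & set(d.lower().rstrip(".").split())]


def predefined_prompt(question: str) -> str:
    """Approach 1: always retrieve with the user's question, then prompt once."""
    context = "\n".join(retrieve(question))
    return f"Context:\n{context}\n\nQuestion: {question}"


def agent_step(model_reply: dict) -> Optional[list]:
    """Approach 2: retrieve only if the model asked, with the query it wrote."""
    call = model_reply.get("tool_call")
    if call and call["name"] == "retrieve":
        return retrieve(call["arguments"]["query"])
    return None
```

In the first function the application fixes both when retrieval happens and what the query is. In the second, both choices belong to the model, which is where the extra flexibility and the extra cost come from.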

Planning Tool Calls

LLMs don’t naturally reveal their thought process unless you use a dedicated reasoning model. But tool calling gives us a workaround, along with ways to keep the agent in check. You can:

- Ask the LLM to draft a plan before calling any tools

- Use a reflection step where the LLM reviews previous actions and adjusts

- Create a second agent whose only job is to critique the first

- Set hard limits on tool calls and tokens

- Filter message history to keep size under control

Good agents aren’t just powerful—they’re monitored and constrained.

Keeping Costs Under Control

Left unchecked, an agent can call tools endlessly to verify its own logic. To prevent runaway behavior:

- Cap tool calls and/or token usage

- Filter the message history

- Deduplicate repeated information

- Add extra tool arguments that force the LLM to summarize previous steps or tool calls
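
Several of these controls fit in a few small helpers. The sketch below is one possible shape, not a prescribed design: the `Budget` class, the helper names, and the message format are all assumptions, and token counts are passed in by the caller rather than measured.

```python
class BudgetExceeded(RuntimeError):
    """Raised when the agent runs past its allowed tool calls or tokens."""


class Budget:
    def __init__(self, max_tool_calls: int, max_tokens: int) -> None:
        self.max_tool_calls = max_tool_calls
        self.max_tokens = max_tokens
        self.tool_calls = 0
        self.tokens = 0

    def charge(self, tokens: int) -> None:
        """Record one tool call and its token cost; stop the run if over."""
        self.tool_calls += 1
        self.tokens += tokens
        if self.tool_calls > self.max_tool_calls or self.tokens > self.max_tokens:
            raise BudgetExceeded("tool-call or token limit reached")


def dedupe_tool_results(messages: list) -> list:
    """Drop tool messages whose content already appeared earlier."""
    seen, kept = set(), []
    for m in messages:
        if m["role"] == "tool":
            if m["content"] in seen:
                continue
            seen.add(m["content"])
        kept.append(m)
    return kept


def trim_history(messages: list, keep_last: int = 6) -> list:
    """Keep the first message (the user's request) plus the most recent ones."""
    if len(messages) <= keep_last + 1:
        return list(messages)
    return [messages[0]] + messages[-keep_last:]
```

Calling `budget.charge(...)` inside the agent loop before each tool execution turns a runaway loop into a clean, catchable error. When trimming history against a real provider API, take care that tool results stay paired with the calls that produced them, since some APIs reject orphaned tool messages.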

The goal is to keep the agent smart, efficient, and affordable.

The Bottom Line

Tool calling unlocks the “agentic” in Agentic AI. It lets LLMs make decisions, take action, and operate beyond their training data. But with that power comes the need for clear controls, thoughtful design, and cost-aware engineering.

Used well, tool calling turns LLMs from passive responders into active problem-solvers—capable of navigating complex tasks, coordinating multiple steps, and producing reliable, actionable outcomes.

If you’re building anything more ambitious than a single-prompt chatbot, tool calling is the key to taking your system beyond RAG and into true Agentic AI.

Let’s talk about how we can turn your AI ideas into measurable results.

At OneSix, we design and deploy AI systems built for the real world. We engineer context, optimize retrieval, and integrate AI into your workflows, so your models deliver accurate, reliable, measurable results.

Written by Matt Altberg, Lead ML Engineer

Published November 19, 2025