Snowflake Cortex Search vs. Custom RAG

Choosing the Right Approach for Enterprise AI

Enterprise adoption of AI is moving quickly, but leaders face a critical question: how do we ground large language models (LLMs) in enterprise data while keeping solutions scalable, accurate, and cost-effective?

Two approaches address that challenge: Snowflake’s Cortex Search, a managed Retrieval-Augmented Generation (RAG) and enterprise search service, and custom-built RAG pipelines. Understanding how each works, and when each is the right fit, is essential for any organization investing in enterprise-ready AI.

The debate begins with a shared starting point: RAG enables LLMs to leverage enterprise data effectively.

What is RAG?

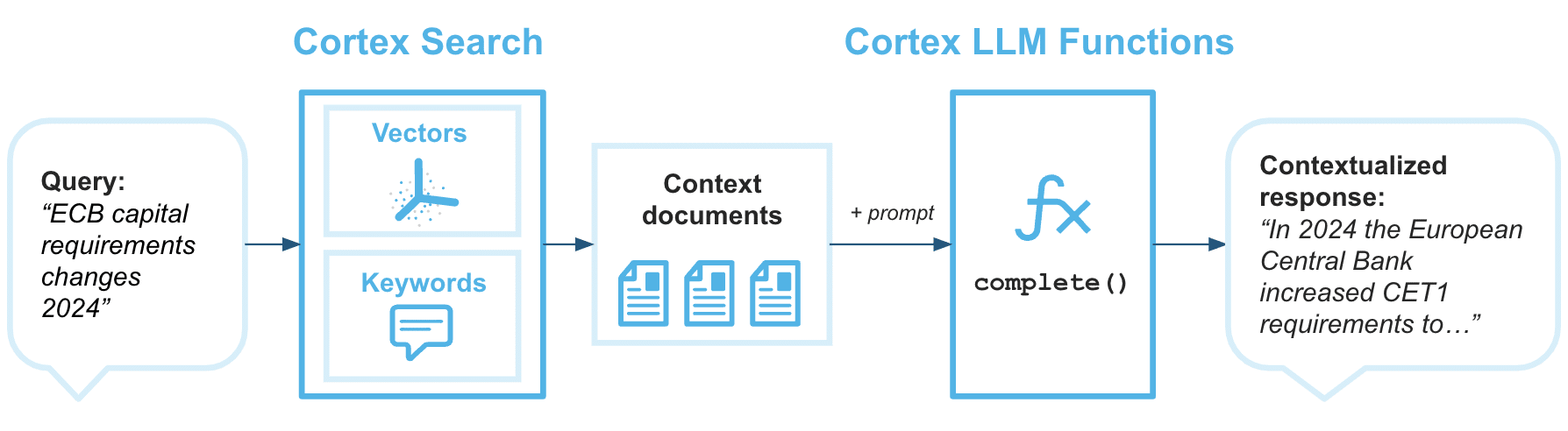

Retrieval-Augmented Generation (RAG) is the process of giving an LLM access to relevant, external information so it can answer queries more accurately.

The typical RAG workflow looks like this:

- User query: A user asks a question.

- Retrieval: A system searches a knowledge base for relevant information.

- Augmentation: The retrieved content is combined with the query and sent to the LLM.

- Generation: The LLM uses the context to produce an answer.
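To make the four steps concrete, here is a minimal, self-contained Python sketch. It is a toy, not a description of how Cortex Search or any production pipeline works internally: the knowledge base is a hypothetical in-memory dict, retrieval is simple word overlap standing in for vector or hybrid search, and `llm` is any callable you supply in place of a real model endpoint.

```python
from collections import Counter
from typing import Callable

# Hypothetical knowledge base: document ID -> text (illustrative only).
KNOWLEDGE_BASE = {
    "policy-travel": "Employees may book economy class for flights under six hours.",
    "policy-remote": "Remote work requires manager approval and a signed agreement.",
    "product-x": "Product X supports single sign-on and audit logging.",
}


def tokenize(text: str) -> list[str]:
    # Lowercase words with surrounding punctuation removed; a crude stand-in for a tokenizer.
    words = (w.strip(".,?!").lower() for w in text.split())
    return [w for w in words if w]


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    # Step 2 (Retrieval): rank documents by how many query words they share.
    query_counts = Counter(tokenize(query))
    scored = []
    for doc_id, text in KNOWLEDGE_BASE.items():
        overlap = sum((query_counts & Counter(tokenize(text))).values())
        if overlap > 0:
            scored.append((overlap, doc_id, text))
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(doc_id, text) for _, doc_id, text in scored[:k]]


def augment(query: str, passages: list[tuple[str, str]]) -> str:
    # Step 3 (Augmentation): combine the retrieved passages with the user's question.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the context below and cite the bracketed sources.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


def answer(query: str, llm: Callable[[str], str]) -> str:
    # Step 1 (User query) enters here; Step 4 (Generation) is delegated to the supplied LLM.
    return llm(augment(query, retrieve(query)))
```

Passing an echo function such as `lambda prompt: prompt` as `llm` lets you inspect the exact prompt that would reach the model, which is a useful first debugging step in any RAG pipeline.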

The value of RAG is that it allows standard, off-the-shelf models to deliver high-quality, context-aware answers grounded directly in your data, whether that is the latest company policy, current product details, or niche industry knowledge.

Why RAG Matters Now

Enterprise AI adoption is accelerating, but models alone are not enough. RAG has become essential because it:

- Grounds responses in source material by retrieving relevant documents that can be cited.

- Keeps answers fresh by drawing on current business data, without regularly retraining a model.

- Improves compliance & personalization by tailoring context to users and enforcing access controls.

In other words, RAG is the bridge between broad LLM capability and business-specific intelligence.
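The compliance and citation points above come down to what happens between retrieval and augmentation. The sketch below shows one common pattern a custom pipeline might use, under assumed names: each chunk carries a hypothetical `allowed_roles` tag, chunks the user may not see are dropped before the prompt is built, and the surviving chunks are numbered so the model can cite them. Managed platforms enforce access through their own mechanisms; this only illustrates the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    source: str                    # document identifier shown in citations
    text: str
    allowed_roles: frozenset[str]  # hypothetical ACL: roles permitted to read this chunk


def authorized(chunks: list[Chunk], user_roles: set[str]) -> list[Chunk]:
    # Keep only chunks that share at least one role with the user, so restricted
    # text never reaches the LLM prompt.
    return [c for c in chunks if c.allowed_roles & user_roles]


def numbered_context(chunks: list[Chunk]) -> str:
    # Number each passage so the model can cite [1], [2], ... and the application
    # can map those citations back to source documents.
    return "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(chunks, start=1))


retrieved = [
    Chunk("hr/salary-bands.pdf", "Band 4 ranges are reviewed annually.", frozenset({"hr"})),
    Chunk("handbook.pdf", "Holidays follow the regional calendar.", frozenset({"hr", "employee"})),
]
print(numbered_context(authorized(retrieved, user_roles={"employee"})))
# [1] (handbook.pdf) Holidays follow the regional calendar.
```

Filtering before augmentation, rather than asking the model to ignore restricted passages, is the safer design choice: content that never enters the prompt cannot leak into the answer.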

The Rise of Enterprise-Ready AI

Snowflake Cortex arrives at a moment when enterprise AI adoption is shifting from experimentation to scale. According to Gartner’s Emerging Tech Impact Radar: Generative AI (2025), one trend stands out that will fundamentally change how enterprises adopt and operationalize AI: AI marketplaces will reshape how enterprises buy AI.

Gartner projects that by 2028, 40% of enterprise purchases of AI assets (models, training data, and tools) will be made through AI marketplaces, up from less than 5% in 2024. This shift will make AI assets more accessible, but it also raises new questions:

- Which models can you trust?

- How do you govern data and IP when assets are purchased externally?

- How do you ensure integration into your enterprise stack isn’t just plug-and-play, but strategic?

As enterprises weigh these decisions, the opportunity is clear: managed services like Cortex Search make it faster than ever to get started, but selecting the right approach—and understanding the tradeoffs—remains critical to long-term success.

The Big Question

With both Cortex Search and custom RAG pipelines available, leaders face a critical decision: When should you use Cortex Search, and when does a custom RAG pipeline make more sense?

The Case for Cortex Search

For many enterprises already running on Snowflake, Cortex Search offers the fastest path to RAG. It delivers a “batteries included” experience with embedding, chunking, document parsing, and auto-updates handled natively inside the Snowflake Data Cloud.

Strengths

- Fast setup: Launch a proof of concept in a day using simple SQL, without new infrastructure.

- Snowflake-native pipeline: Runs entirely inside Snowflake for simpler ops, monitoring, and data security.

- Built-in app layer: Streamlit is included for quickly developing and hosting simple web apps.

- Auto-managed vector database: Indexes and storage handled automatically with direct Snowflake access.

- Text and document utilities: Parse and chunk common file types for easy retrieval prep.

- Extensible with Python: Customize with UDFs and Snowpark; scale out to Snowpark Container Services (SPCS) for advanced use cases.

- Path to customization: Extend with custom RAG or external APIs while keeping compute in Snowflake.
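Chunking is one of the preparation steps these utilities take off your plate, and it is also the first knob a custom pipeline tends to tune. As a rough illustration, the sketch below splits text into overlapping fixed-size windows. It counts whitespace-separated words as a proxy for tokens (embedding models use subword tokenizers, so real token counts run higher), and the 512/64 defaults are assumptions chosen to echo the 512-token figure in the comparison table, not Snowflake’s internal settings.

```python
def chunk_words(text: str, max_tokens: int = 512, overlap: int = 64) -> list[str]:
    """Split text into windows of at most `max_tokens` words, each sharing
    `overlap` words with the previous window. Words approximate tokens here."""
    if max_tokens <= 0 or not 0 <= overlap < max_tokens:
        raise ValueError("require max_tokens > 0 and 0 <= overlap < max_tokens")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # this window already reaches the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(1000))
pieces = chunk_words(doc)
print(len(pieces))  # 3 windows: words 0-511, 448-959, 896-999
```

The overlap means text near a boundary appears in two neighboring chunks, which reduces the chance that a retrievable fact is cut in half; the cost is a slightly larger index.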

Best Fit For

Cortex Search is best suited for teams that:

- Want to demonstrate value quickly through pilots or POCs, with a clear path to scalable production deployment.

- Prefer a Snowflake-native approach with minimal infrastructure to manage.

- Need a high-quality, low-maintenance way to enable search across enterprise data.

Key Considerations

When evaluating Cortex Search, organizations should consider:

- Is text-based search sufficient for current needs, or will multimodal retrieval become important in the future?

- Do your scaling requirements align with managed service thresholds today, and how might they evolve over time?

- How critical is ingestion speed for your workflows, and are built-in parsing tools sufficient to support those needs?
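For the scaling question, a back-of-the-envelope estimate is often enough to tell whether a corpus sits comfortably inside a managed ceiling such as the 100M-chunk figure in the comparison table. The sketch below assumes fixed-window chunking (512 tokens with 64 tokens of overlap), a uniform document length, and a hypothetical steady annual growth rate; substitute your own corpus statistics and confirm current limits in Snowflake’s documentation.

```python
import math


def chunks_per_doc(doc_tokens: int, chunk_tokens: int = 512, overlap_tokens: int = 64) -> int:
    # Number of overlapping fixed-size windows needed to cover one document.
    if doc_tokens <= 0:
        return 0
    if doc_tokens <= chunk_tokens:
        return 1
    step = chunk_tokens - overlap_tokens
    return 1 + math.ceil((doc_tokens - chunk_tokens) / step)


def estimate_total_chunks(num_docs: int, avg_doc_tokens: int) -> int:
    # Assumes every document has the average length; real corpora are usually skewed.
    return num_docs * chunks_per_doc(avg_doc_tokens)


def years_until_limit(current_chunks: float, annual_growth: float, limit: float = 100_000_000) -> int:
    # Whole years of compound growth before the chunk count exceeds `limit`.
    if current_chunks > limit:
        return 0
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive to ever reach the limit")
    years = 0
    while current_chunks <= limit:
        current_chunks *= 1 + annual_growth
        years += 1
    return years


total = estimate_total_chunks(num_docs=2_000_000, avg_doc_tokens=3_000)
print(total)                                        # 14000000 (7 chunks per document)
print(years_until_limit(total, annual_growth=0.5))  # 5
```

In this hypothetical case, 2M documents averaging 3,000 tokens yield about 14M chunks, roughly 14% of the ceiling, yet at 50% annual growth the corpus would cross it in year five. That is the "how might they evolve over time" part of the question made concrete.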

These considerations highlight the importance of aligning the right approach to the right use case, helping organizations get the most out of Cortex Search today and in the future.

The Case for Custom RAG

While Cortex Search is designed to cover a wide range of enterprise use cases, some organizations encounter requirements that go beyond its current scope. In those cases, a custom RAG architecture may be the right fit.

Strengths

- Flexibility: Support for multimodal content such as text, images, audio, and video.

- Optimized strategies: Fine-tuned chunking, embedding, scoring, and retrieval pipelines to maximize accuracy.

- Regional and model choice: Ability to deploy in preferred regions and integrate with specific LLMs.
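As one example of what optimized scoring can mean in practice, many custom pipelines run keyword and vector retrieval side by side and merge the two ranked lists. The sketch below uses Reciprocal Rank Fusion (RRF), a simple, well-known fusion method; it is a swapped-in illustration rather than a prescribed design. The document IDs are hypothetical, and k = 60 is the constant commonly used with RRF.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge several ranked lists of document IDs into one.

    Each document earns 1 / (k + rank) from every list it appears in (rank starts
    at 1); a larger k flattens the difference between top and lower ranks.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for stable output.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


keyword_hits = ["doc-a", "doc-b", "doc-c"]  # e.g. from a full-text (BM25-style) index
vector_hits = ["doc-b", "doc-c", "doc-d"]   # e.g. from an embedding similarity search
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print([doc_id for doc_id, _ in fused])  # ['doc-b', 'doc-c', 'doc-a', 'doc-d']
```

Because RRF uses only ranks, it sidesteps the fact that keyword and vector scores live on different scales; documents that both retrievers agree on (here `doc-b` and `doc-c`) rise to the top.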

Best Fit For

Custom RAG is best suited for enterprises that:

- Require multimodal retrieval (e.g., analyzing documents, images, and voice data together).

- Need complex or domain-specific pipelines that go beyond text search.

- Operate in regulated industries or geographies where model choice and data locality matter.

Tradeoffs

The tradeoff for flexibility is complexity. Custom RAG requires:

- Dedicated engineering and infrastructure.

- Ongoing maintenance and monitoring.

- Higher upfront investment to design, implement, and scale.

Choosing the Right Approach

Enterprises evaluating RAG often face a decision between Cortex Search (Snowflake’s managed, turnkey option) and a custom RAG architecture built for flexibility and control.

The right choice depends on the end goal: aligning the approach to the use case ensures organizations get the most value from their investment.

This comparison can be viewed from two angles: (1) feature differences such as setup, scaling, and control, and (2) evaluation criteria that guide leaders in choosing the best fit for their priorities.

Cortex Search vs. Custom RAG

Setup, scale, and control at a glance.

| Feature | Cortex Search | Custom RAG |
|---|---|---|
| Setup | Turnkey (SQL functions, auto-updates) | Complex (vector DB, pipelines, orchestration) |
| Supported data | Text only (PDF, DOCX, JPG, PNG converted to text); up to 512 tokens per chunk | Multimodal (text, image, audio, video) |
| Scaling | 100M chunks max | No fixed cap; bounded by your infrastructure |
| Control | Limited (managed, largely black-box) | Full flexibility |
| Best use | Rapid POCs, Snowflake-native apps | Custom enterprise AI, domain-specific use cases |

A Hybrid Approach

For many enterprises, the best path is not either/or, but both. Cortex Search provides a fast, Snowflake-native way to launch retrieval-augmented applications with minimal setup. As needs grow — more data types, domain-specific performance, or advanced retrieval strategies — a custom RAG architecture can extend those foundations without starting over.
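One way to keep that extension path open is to have application code depend on a small retrieval interface rather than on any one backend. The Python sketch below is an assumed design, not a Snowflake API: `FunctionRetriever` wraps whatever callable actually queries a backend (for example a Cortex Search client or a custom vector store, neither shown here), and a hypothetical `ModalityRouter` sends text queries to the managed default while routing new modalities to a custom pipeline as they are added.

```python
from typing import Callable, Protocol


class Retriever(Protocol):
    """Anything that returns up to `limit` passages for a query."""

    def search(self, query: str, limit: int) -> list[str]: ...


class FunctionRetriever:
    """Adapts a plain callable (query, limit) -> passages to the Retriever interface."""

    def __init__(self, fn: Callable[[str, int], list[str]]) -> None:
        self._fn = fn

    def search(self, query: str, limit: int) -> list[str]:
        return self._fn(query, limit)


class ModalityRouter:
    """Hypothetical router: one default retriever plus per-modality overrides."""

    def __init__(self, default: Retriever) -> None:
        self._default = default
        self._routes: dict[str, Retriever] = {}

    def register(self, modality: str, retriever: Retriever) -> None:
        # Adding a custom pipeline later is a registration, not a rewrite of callers.
        self._routes[modality] = retriever

    def search(self, query: str, modality: str = "text", limit: int = 5) -> list[str]:
        return self._routes.get(modality, self._default).search(query, limit)


# Stand-in backends; real ones would call a managed search service or a custom index.
managed_text = FunctionRetriever(lambda q, n: [f"managed:{q}"][:n])
custom_images = FunctionRetriever(lambda q, n: [f"image-index:{q}"][:n])

router = ModalityRouter(default=managed_text)
print(router.search("quarterly revenue policy"))          # ['managed:quarterly revenue policy']
router.register("image", custom_images)
print(router.search("damaged pallet", modality="image"))  # ['image-index:damaged pallet']
```

Callers only ever see `router.search(...)`, so starting on a managed service and later adding specialized pipelines changes configuration rather than application code.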

Aligning Approach to Use Case

The key is alignment: matching the approach to the use case. Whether the priority is speed, scale, or specialization, organizations can maximize value by choosing the right starting point and planning for future flexibility.

The Path Forward

Every enterprise’s journey with AI looks different. Whether you start with Cortex Search, scale with custom RAG, or combine both, the key is choosing an approach that aligns with your business goals. That’s where OneSix comes in.

Written by

Osman Shawkat, Senior ML Scientist

Published

September 22, 2025

Expert Guidance, Real-World Implementation

We help enterprises choose the right AI approach and make it real. Our team of senior engineers and PhD-trained scientists designs, deploys, and scales solutions that deliver impact fast.
