What is Embedded Analytics?

Published

July 25, 2022

With modern data analytics, businesses have improved their operational efficiency, removed costly bottlenecks, and driven revenue growth. They are using dashboards within business intelligence platforms to make data-driven decisions.

However, businesses often miss opportunities to drive better results because data insights are not available during day-to-day activities. Dashboards and key insights are locked within business intelligence platforms. If employees have to toggle between applications to understand the state of the business, they miss the data they need to make informed decisions that have a big impact on the bottom line.

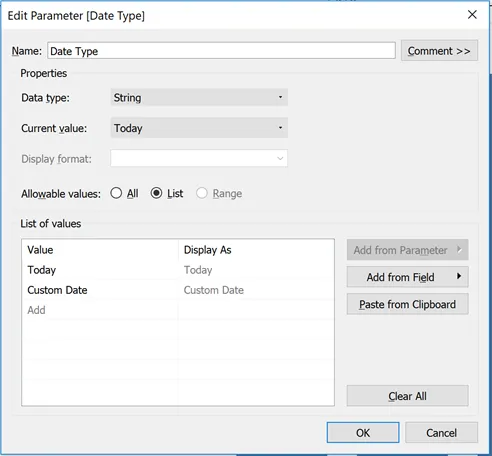

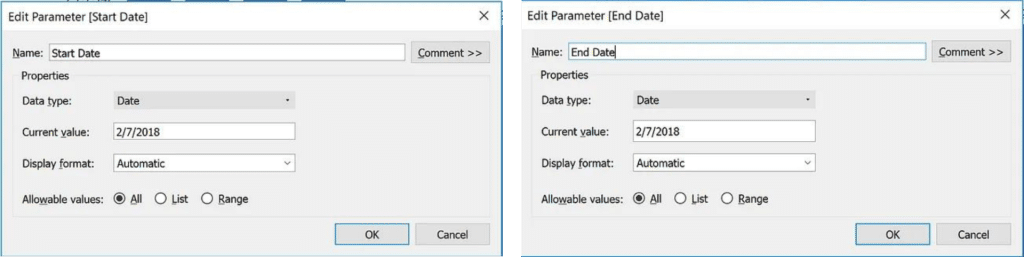

Embedded analytics places these insights at the point of need, inside portal and workflow applications, so users can take immediate action without leaving the context of their day-to-day work. It drives efficiencies within workflows and can significantly increase user adoption of your analytics investments.

Integrating data analytics into web applications allows the business to take immediate actions on insights that may currently be hidden in reporting applications.

Why consider embedded analytics?

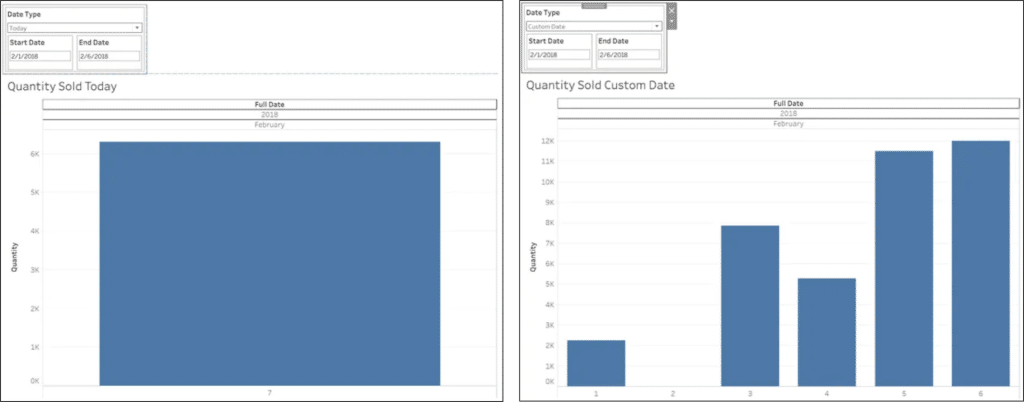

1. Actionable insights, where action can be taken. By embedding analytics into your users' workflows, you let them make decisions that take the state of the business into consideration. Insights can be gleaned in the context of the workflow, allowing for better, more informed decision-making.

2. Creates a culture of data-driven decisions. With insights no longer hidden in analytics applications, users are empowered to make decisions based on factual data, not opinion, leading to better business outcomes and reducing the risk of bad or uninformed decisions.

3. Improved data governance. By giving your internal applications the ability to embed analytics from a single source, you reduce the risk of multiple implementations of the same insight or calculation. All users of your data will see the same validated and centrally governed analysis, which they can trust.

4. Better user experience. Traditional analytics applications offer a streamlined way to build reports and dashboards. Embedded analytics takes those concepts to the next level by bringing reports and dashboards seamlessly into the other applications the business uses, whether they are web applications or mobile apps.

5. New revenue opportunities. Embedded analytics allows businesses to monetize their data by offering customers analytics access inside their product offerings or by creating upsell opportunities.

How to get started with embedded analytics

Getting the best possible business results with embedded analytics requires the right technology stack for your business, and experience integrating data and applications.

One Six Solutions consultants are experienced in both building web-based workflow applications and delivering on your data strategy. We are uniquely positioned to deliver embedded analytics that allows your business to make more informed decisions quickly and efficiently.

If you would like to see how we can help with your embedded analytics project, contact us today for a free consultation.