Behind the Booth: How We Delivered a Live AI Experience at Snowflake Summit

Written by Ryan Lewis, Sr. Lead Consultant

Published June 13, 2025

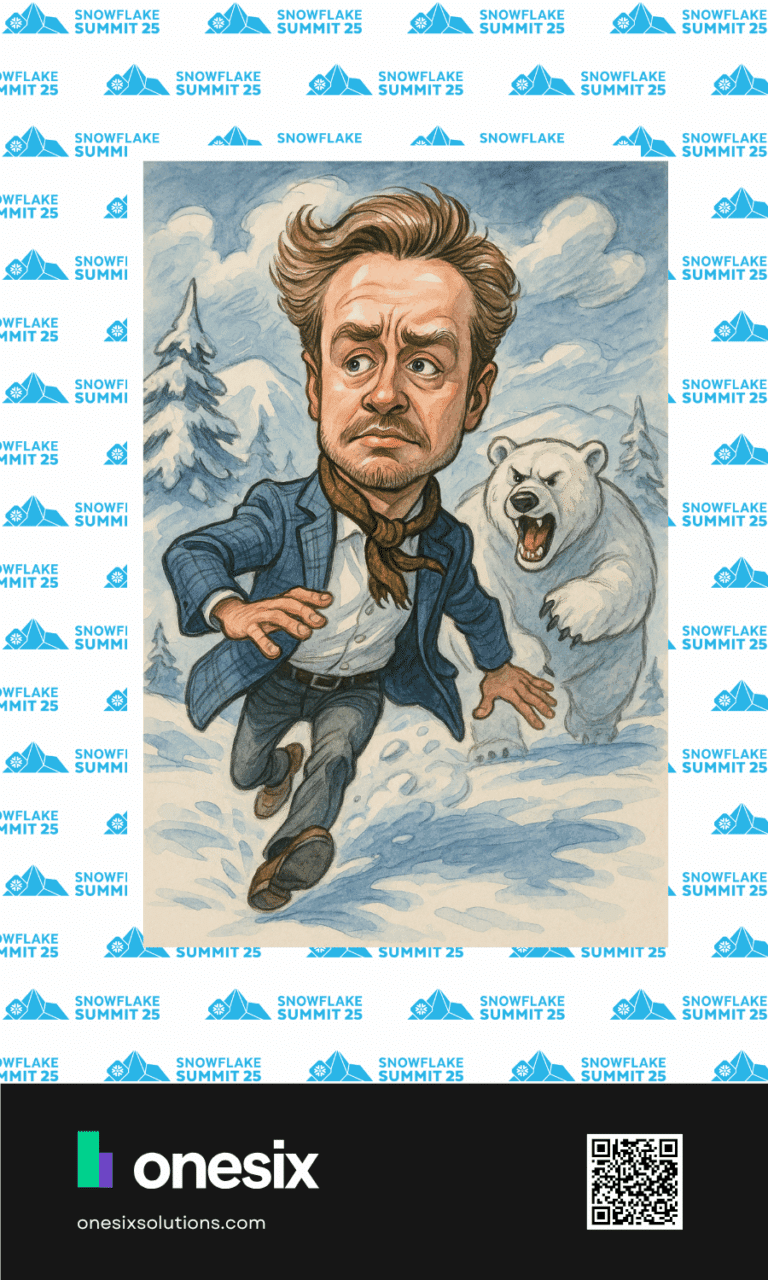

We wanted to do more than just hand out stickers at this year’s Snowflake Summit. We wanted to create an experience—something fun, immersive, and hands-on that brought the power of AI, Snowflake, and real-time analytics to life.

So we built a two-part AI solution that combined facial recognition, mood detection, and instant dashboarding. The result? A booth where attendees could get a custom AI-generated caricature and learn how advanced tools like Snowflake, Landing AI, and Sigma come together to power intelligent experiences.

The Big Idea: Fun Meets Function

We set out to show what’s possible when modern data and AI tools are thoughtfully combined. Our booth experience worked like this:

1

Snap a Photo

Attendees had their photo taken at the booth.

2

Generate a Caricature

The image was sent to an AI service to create a playful caricature.

3

Analyze Your Mood

At the same time, the photo was sent to a custom mood detection model powered by LandingLens (via Snowflake) to classify your expression.

4

See the Results in Real Time

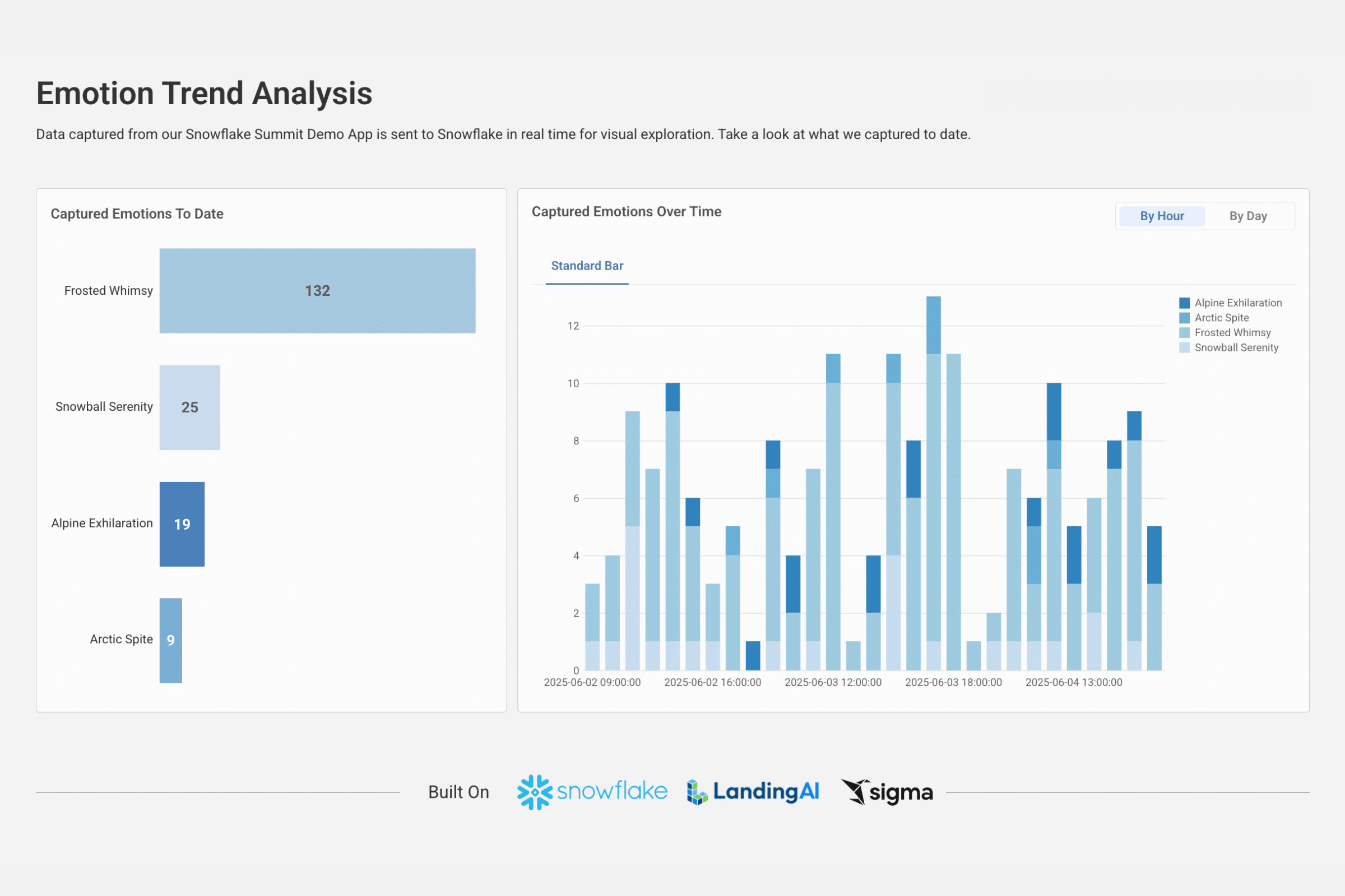

Your mood data was logged in Snowflake and instantly visualized on a Sigma dashboard alongside aggregated visitor insights.

5

Take Home the Fun

Every participant got a printed caricature to keep—and a great story to share on LinkedIn.

Behind the Scenes: How It All Worked

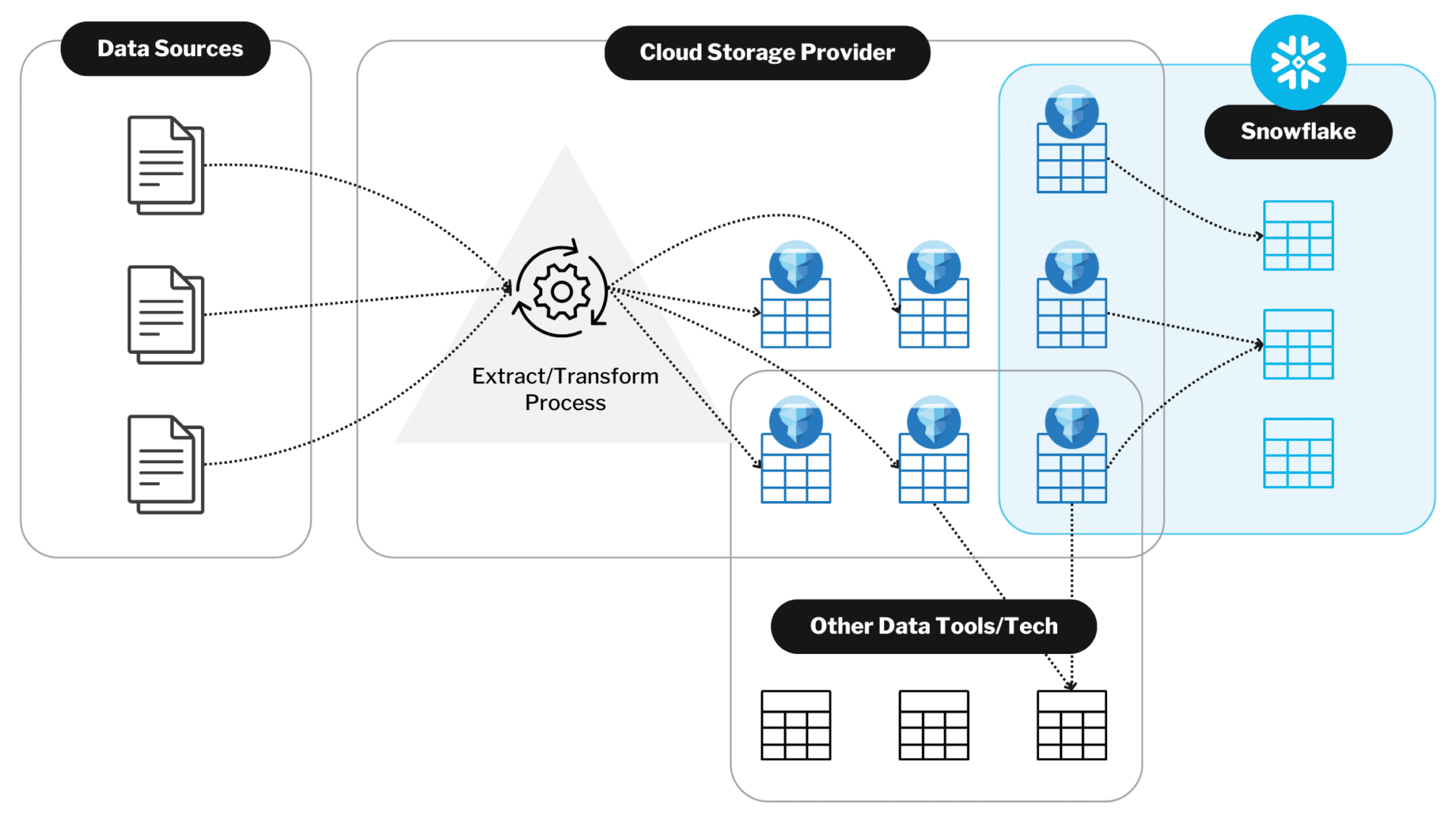

Data Collection & Processing

We started by gathering internal data: photos of the OneSix team acting out specific moods. We ran these images through Amazon Rekognition to crop the headshots, then stored the results in an S3 bucket.
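To make the cropping step concrete, here is a minimal sketch. Rekognition's DetectFaces response describes each face with a `BoundingBox` whose `Left`, `Top`, `Width`, and `Height` are ratios of the overall image dimensions, so they have to be converted to pixels before cropping. The function name `bbox_to_pixels`, the padding value, and the sample numbers are assumptions for illustration, not the code that ran at the booth.

```python
def bbox_to_pixels(bbox, img_w, img_h, pad=0.15):
    """Convert a Rekognition-style relative BoundingBox to a pixel crop box.

    bbox holds Left/Top/Width/Height as fractions of the image size.
    pad (an assumed value) widens the box by a fraction of the face size.
    Returns (left, top, right, bottom), clamped to the image bounds.
    """
    pad_w = bbox["Width"] * pad
    pad_h = bbox["Height"] * pad
    left = max(0.0, bbox["Left"] - pad_w)
    top = max(0.0, bbox["Top"] - pad_h)
    right = min(1.0, bbox["Left"] + bbox["Width"] + pad_w)
    bottom = min(1.0, bbox["Top"] + bbox["Height"] + pad_h)
    return (round(left * img_w), round(top * img_h),
            round(right * img_w), round(bottom * img_h))


# Hypothetical face box, shaped like FaceDetails[i]["BoundingBox"] in a DetectFaces response.
sample_box = {"Left": 0.25, "Top": 0.1, "Width": 0.5, "Height": 0.6}
crop_box = bbox_to_pixels(sample_box, img_w=1000, img_h=800, pad=0.1)
```

The resulting tuple follows the (left, upper, right, lower) order that an image library such as Pillow expects in `Image.crop`.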

But to train a meaningful model, we needed more. We augmented our dataset with hand-picked stock photos and synthetic headshots generated with OpenAI's gpt-image-1 to ensure variety and balance across moods.
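One simple way to think about "balance across moods" is to count the images collected per mood and work out how many more each class needs. The sketch below does that. The mood names and counts are hypothetical (this post does not list the actual classes), and `images_needed` is an illustrative helper, not part of any tool mentioned here.

```python
from collections import Counter


def images_needed(labels, target=None):
    """Return how many extra images each mood needs to reach `target`.

    labels is a list with one mood label per image already collected.
    If target is None, every mood is topped up to the largest class.
    """
    counts = Counter(labels)
    goal = target if target is not None else max(counts.values())
    return {mood: max(0, goal - n) for mood, n in counts.items()}


# Hypothetical label counts, for illustration only.
collected = ["happy"] * 40 + ["surprised"] * 25 + ["serious"] * 10
gaps = images_needed(collected)
```

The per-mood gaps then indicate roughly how many stock or synthetic images to add for each class.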

Model Training in LandingLens

Training our mood detection model involved:

- Creating a LandingLens project through the Snowflake Native App.

- Uploading training data by mood for automatic labeling.

- Launching training runs with custom settings for split ratios, epochs, and image augmentation.

LandingLens made it easy to go from raw images to a trained model with its low-code interface and tight Snowflake integration.
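For readers unfamiliar with split ratios, the sketch below shows the general idea of dividing labeled images into train, dev, and test sets mood by mood, so each set keeps a similar class mix. It is a generic illustration with assumed ratios (70/20/10) and hypothetical file names. LandingLens exposes the split as a setting, and this code does not reflect how it implements the split internally.

```python
import random
from collections import defaultdict


def stratified_split(items, percents=(70, 20, 10), seed=42):
    """Split (path, mood) pairs into train/dev/test, mood by mood.

    percents are assumed values; the first two set the train and dev
    sizes and whatever remains goes to test.
    """
    by_mood = defaultdict(list)
    for path, mood in items:
        by_mood[mood].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    splits = {"train": [], "dev": [], "test": []}
    for mood, paths in sorted(by_mood.items()):
        rng.shuffle(paths)
        n_train = len(paths) * percents[0] // 100
        n_dev = len(paths) * percents[1] // 100
        splits["train"] += [(p, mood) for p in paths[:n_train]]
        splits["dev"] += [(p, mood) for p in paths[n_train:n_train + n_dev]]
        splits["test"] += [(p, mood) for p in paths[n_train + n_dev:]]
    return splits


# Hypothetical file names and moods, 10 images per mood.
items = [(f"{mood}_{i}.jpg", mood) for mood in ("happy", "serious") for i in range(10)]
splits = stratified_split(items)
```

Splitting within each mood, rather than across the whole dataset at once, avoids a small class ending up entirely in one set.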

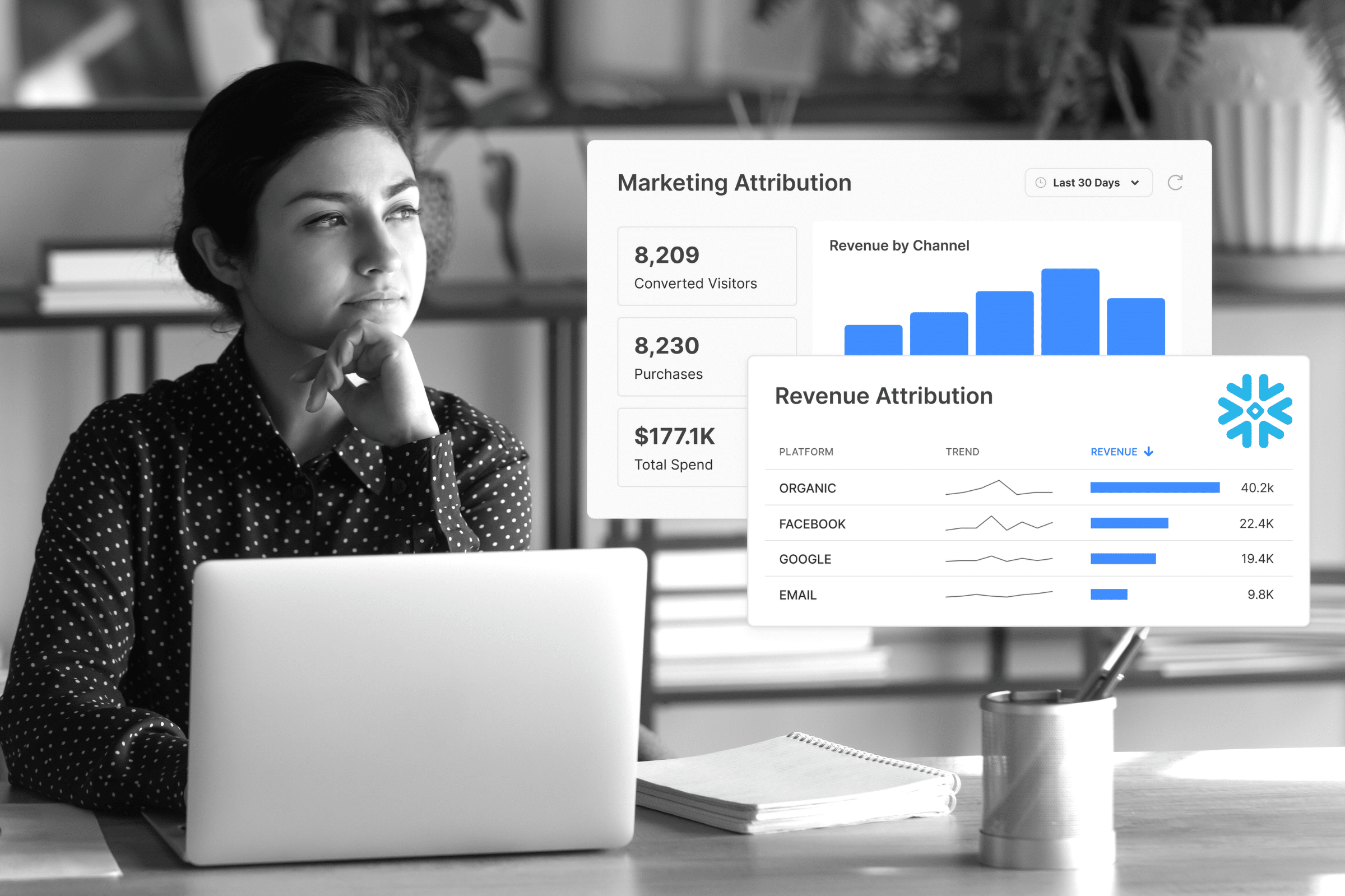

Real-Time Inference & Dashboarding

When an attendee took a photo:

- The image was cropped via Amazon Rekognition.

- The cropped headshot was sent to the LandingLens model endpoint.

- The predicted mood was written to Snowflake.

- Sigma read directly from Snowflake to update our real-time dashboard, with no data duplication and no noticeable lag.

The entire process happened in seconds. One moment you’re smiling at the camera, the next you’re a dot on a live mood chart.
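The flow above can be sketched as a small pipeline function. To keep the example runnable without any external service, the three steps are passed in as callables. Every name here (`process_photo`, `MoodRecord`, `crop_face`, `predict_mood`, `write_record`) is hypothetical, and the stand-in lambdas replace what would be a Rekognition-based crop, a call to the model endpoint, and an insert into a Snowflake table.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, List, Tuple


@dataclass
class MoodRecord:
    photo_id: str
    mood: str
    confidence: float
    captured_at: datetime


def process_photo(
    photo_id: str,
    image_bytes: bytes,
    crop_face: Callable[[bytes], bytes],
    predict_mood: Callable[[bytes], Tuple[str, float]],
    write_record: Callable[[MoodRecord], None],
) -> MoodRecord:
    """Run one photo through crop -> predict -> write and return the record."""
    headshot = crop_face(image_bytes)          # stand-in for the face crop
    mood, confidence = predict_mood(headshot)  # stand-in for the model endpoint call
    record = MoodRecord(photo_id, mood, confidence, datetime.now(timezone.utc))
    write_record(record)                       # stand-in for the Snowflake write
    return record


# Stand-in callables so the sketch runs on its own.
stored: List[MoodRecord] = []
result = process_photo(
    "photo-001",
    b"raw-image-bytes",
    crop_face=lambda img: img[:3],
    predict_mood=lambda face: ("happy", 0.93),
    write_record=stored.append,
)
```

Passing the steps in as functions keeps the orchestration separate from the service clients, which makes the flow easy to test with fakes before wiring in real credentials.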

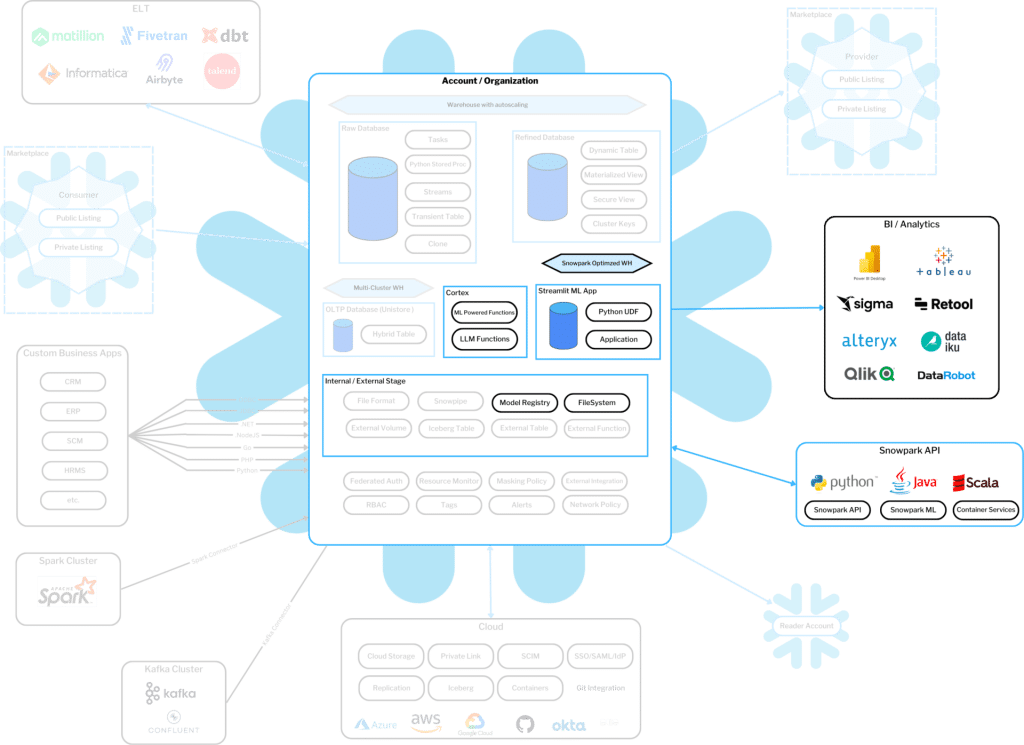

Why We Chose These Tools

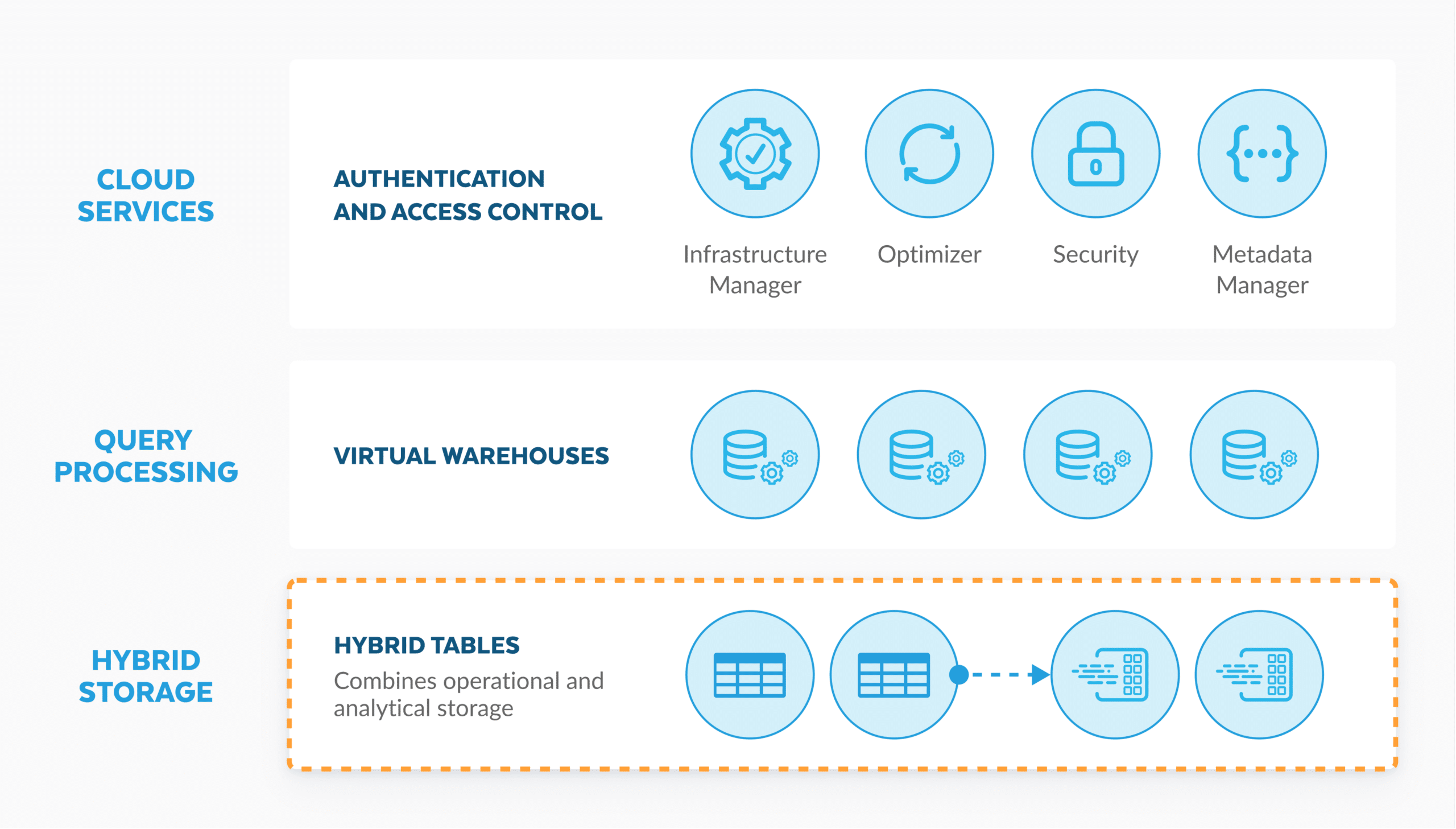

Snowflake

- Unified data platform for storage, compute, and ML integrations

- Central hub for all interactions—from inference to visualization

Landing AI's LandingLens

- Ideal for small, focused datasets

- Snowflake Native App keeps data and compute governed and secure

- Streamlines model development with a no-code interface

- Supports custom training and inference at scale

Sigma

- Real-time dashboarding directly from Snowflake

- No data extraction = stronger security and compliance

- Fast to build, easy to customize, visually compelling

The Takeaway

This wasn’t just a gimmick. It was a live demonstration of what’s possible when you combine the right technologies with a little creativity.

At OneSix, we believe data and AI should feel approachable and practical. This booth experience proved that smart, scalable, and governed AI applications don’t have to be intimidating—or boring. Thanks to everyone who stopped by to participate. We hope you had as much fun as we did. See you next year!

AI-Ready. Future-Focused.

Powered by Snowflake + OneSix.

Whether you’re just starting or scaling, OneSix helps you build intelligent data solutions with Snowflake at its core. We bring the strategy, architecture, and AI expertise to turn your data into real business outcomes.
