Data Horizon: Innovation Recap & Top Trends for 2024

Written by

Ajit Monteiro, CTO & Co-Founder

Published

December 15, 2023

Data Technology Innovation Recap

In 2023, “Artificial Intelligence (AI)” transitioned from a mere buzzword to a tangible reality. Snowflake’s focus on Apps and AI led to strategic acquisitions and significant product updates, while Matillion introduced its cloud-native Data Productivity Cloud and Power BI introduced new features like Copilot and DAX Query View. Explore how these advancements are transforming data analytics and intelligence.

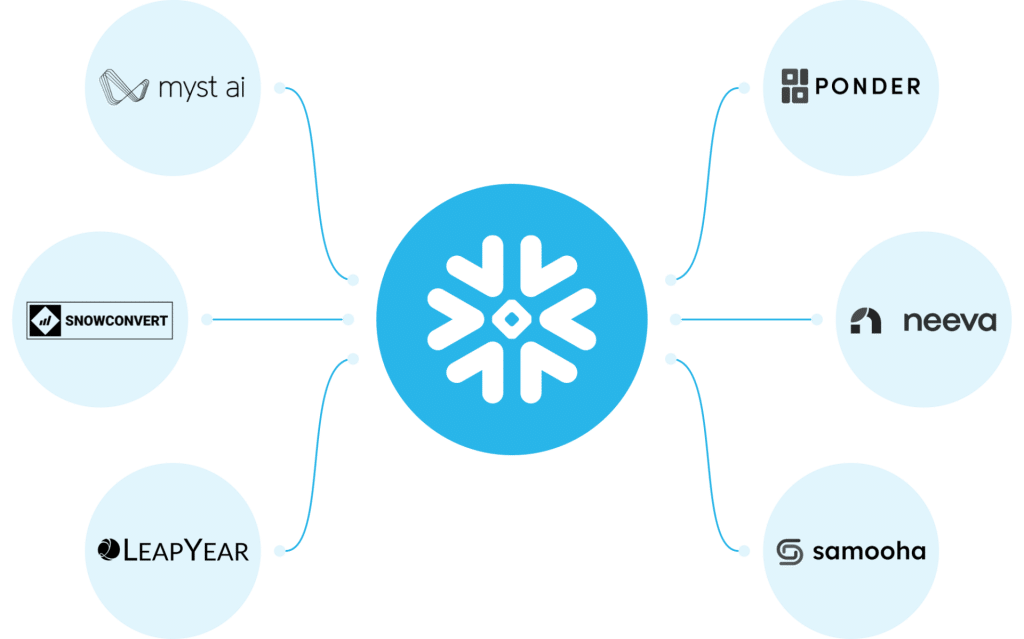

Snowflake Updates

This year, Snowflake made several acquisitions to improve its AI, data sharing, migration, and Python offerings.

Myst AI

Acquired for its time series forecasting abilities, which are crucial for optimizing business operations like supply chain management and financial planning.

SnowConvert

Acquired to bolster Snowflake’s migration toolkit, significantly easing the transition of legacy databases to Snowflake’s cloud data platform.

LeapYear

Acquired to incorporate differential privacy into Snowflake’s services, enabling secure data collaboration with mathematically proven privacy protection.

Ponder

Acquired to enrich Snowflake’s data capabilities with Ponder’s expertise in bridging data science libraries and scalable data operations, fostering enhanced analytics and machine learning functionality.

Neeva

Acquired to integrate advanced, privacy-focused search capabilities into Snowflake’s data cloud, enhancing user experience and competitive edge in data analytics.

Samooha

Acquired to accelerate Snowflake’s vision for removing the technical, collaboration, and financial barriers to unlocking value with data clean rooms.

Improved Python Support

(Generally Available): Snowflake has notably enhanced its Python support, allowing data scientists and engineers to integrate Python tools and libraries with Snowflake’s platform seamlessly. This enhancement facilitates efficient data analysis and machine learning directly within Snowflake, leveraging Python’s widespread popularity in the data science community to maximize the platform’s data analytics capabilities.

Why it matters: Allows you to use Python inside Snowflake, including in user-defined functions and workbooks.
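As a rough illustration of the user-defined function case, the handler behind a Python UDF is just an ordinary Python function. The sketch below is hypothetical: the function name `normalize_sku` and its cleaning logic are made up for this example, and the registration statement in the comment is only an outline of the `CREATE FUNCTION ... LANGUAGE PYTHON` form, with the runtime version left as a placeholder.

```python
# Hypothetical handler for a Snowflake Python UDF (name and logic are
# illustrative assumptions, not taken from Snowflake's documentation).
#
# Registration would look roughly like this in SQL:
#   CREATE OR REPLACE FUNCTION normalize_sku(raw STRING)
#   RETURNS STRING
#   LANGUAGE PYTHON
#   RUNTIME_VERSION = '<supported version>'
#   HANDLER = 'normalize_sku'
#   AS $$ ...the function below... $$;


def normalize_sku(raw):
    """Trim, upper-case, and strip separators from a product code."""
    if raw is None:
        # Assumption: a SQL NULL reaches the handler as None.
        return None
    return raw.strip().upper().replace("-", "").replace(" ", "")


if __name__ == "__main__":
    print(normalize_sku("  ab-12 34 "))  # prints AB1234
```

Because the handler has no Snowflake-specific dependencies, it can be unit tested locally before it is registered.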

Snowflake Native Apps Framework

(Available on AWS, Private Preview on Azure): Snowflake has expanded its ecosystem by introducing native applications, a move that significantly enriches the functionality and flexibility of its cloud data platform. These native apps, developed either by Snowflake or third-party developers, are designed to operate seamlessly within Snowflake’s environment, offering users a range of tools and services directly accessible within the platform.

Why it matters: Allows for developing and publishing data applications inside of Snowflake.

Streaming and Dynamic Tables

(Open Preview): Dynamic Tables allow users to define transformation logic with simple, declarative SELECT statements, and Snowflake will automatically keep the table up to date with cost-efficient incremental updates and a custom-defined refresh schedule.

Why it matters: Dynamic Tables remove the need for some customers to maintain their own incremental update ETL framework.
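To make the declarative idea concrete, here is a minimal sketch that assembles a `CREATE DYNAMIC TABLE` statement from a SELECT. The helper `dynamic_table_ddl`, the table `raw_orders`, and the warehouse `transform_wh` are hypothetical names; the sketch also assumes the refresh cadence is expressed through the `TARGET_LAG` parameter. It only builds the SQL text and does not connect to Snowflake.

```python
import textwrap


def dynamic_table_ddl(name, warehouse, target_lag, select_sql):
    """Build a CREATE DYNAMIC TABLE statement around a SELECT query.

    All identifiers are supplied by the caller; nothing is executed here.
    """
    query = textwrap.dedent(select_sql).strip()
    return (
        f"CREATE OR REPLACE DYNAMIC TABLE {name}\n"
        f"  TARGET_LAG = '{target_lag}'\n"
        f"  WAREHOUSE = {warehouse}\n"
        f"AS\n{query};"
    )


# Hypothetical example: keep a daily totals table within five minutes
# of the (made-up) source table raw_orders.
ddl = dynamic_table_ddl(
    name="daily_order_totals",
    warehouse="transform_wh",
    target_lag="5 minutes",
    select_sql="""
        SELECT order_date, SUM(amount) AS total_amount
        FROM raw_orders
        GROUP BY order_date
    """,
)

if __name__ == "__main__":
    print(ddl)
```

The point of the sketch is that the whole pipeline is one query plus a freshness target; there is no hand-written merge or watermark logic to maintain.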

Support for Git Integration

Why it matters: Allows development teams to work simultaneously on a codebase while tracking and managing changes efficiently.

Notifications for Better Observability

(Generally Available): The new SYSTEM$SEND_EMAIL() system stored procedure can be used to send email notifications. You can call this stored procedure to send an email notification from a task, your own stored procedure, or an interactive session.

Why it matters: Allows for data-driven email notifications for use-cases like KPI thresholds, data observability, access monitoring, etc.
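A KPI-threshold alert could be sketched as follows. The helper names, the integration name `my_email_int`, the recipient address, and the KPI values are all hypothetical; the sketch assumes a notification integration already exists and that `SYSTEM$SEND_EMAIL` takes the integration name, a comma-separated recipient list, a subject, and a body. It only builds the `CALL` statement as text.

```python
def sql_literal(text):
    """Quote a Python string as a SQL string literal (doubles single quotes)."""
    return "'" + text.replace("'", "''") + "'"


def kpi_alert_call(integration, recipients, kpi_name, value, threshold):
    """Return a CALL SYSTEM$SEND_EMAIL statement if the KPI is below threshold.

    Returns None when the KPI meets the threshold, so no email is sent.
    """
    if value >= threshold:
        return None
    subject = f"KPI alert: {kpi_name}"
    body = f"{kpi_name} is {value}, below the threshold of {threshold}."
    args = ", ".join(
        sql_literal(arg)
        for arg in (integration, ", ".join(recipients), subject, body)
    )
    return f"CALL SYSTEM$SEND_EMAIL({args});"


# Hypothetical values for illustration only.
statement = kpi_alert_call(
    integration="my_email_int",
    recipients=["ops@example.com"],
    kpi_name="daily_orders",
    value=80,
    threshold=100,
)

if __name__ == "__main__":
    print(statement)
```

In practice a statement like this would typically run inside a scheduled task or stored procedure rather than being assembled client-side.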

External Network Access

(Open Preview): You can create secure access to specific network locations external to Snowflake, then use that access from within the handler code for user-defined functions (UDFs) and stored procedures. You can enable this access through an external access integration.

Why it matters: Lets you allow or block specific outbound network access from Snowflake, as well as call external APIs from Snowflake.
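The setup described above generally involves two objects: a network rule listing the permitted destinations, and an external access integration that references the rule. The following sketch generates both statements as text. The helper name, the rule and integration names, and the host `api.example.com` are hypothetical, and the exact parameters should be checked against Snowflake's documentation, since the feature was in preview at the time of writing.

```python
def external_access_statements(rule_name, integration_name, host_ports):
    """Build the two SQL statements for outbound access to specific hosts.

    host_ports is a list of 'host:port' strings, e.g. ['api.example.com:443'].
    Nothing is executed; the statements are returned as strings.
    """
    values = ", ".join(f"'{hp}'" for hp in host_ports)
    network_rule = (
        f"CREATE OR REPLACE NETWORK RULE {rule_name}\n"
        f"  MODE = EGRESS\n"
        f"  TYPE = HOST_PORT\n"
        f"  VALUE_LIST = ({values});"
    )
    integration = (
        f"CREATE OR REPLACE EXTERNAL ACCESS INTEGRATION {integration_name}\n"
        f"  ALLOWED_NETWORK_RULES = ({rule_name})\n"
        f"  ENABLED = TRUE;"
    )
    return [network_rule, integration]


if __name__ == "__main__":
    # Hypothetical names and host, for illustration only.
    for stmt in external_access_statements(
        "weather_api_rule", "weather_api_access", ["api.example.com:443"]
    ):
        print(stmt, end="\n\n")
```

A UDF or stored procedure would then name the integration in its definition so that its handler code can reach only the listed hosts.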

Snowpark Container Services

(Expected Release in Summer 2024): Enables developers to deploy, manage, and scale generative AI and full-stack apps, including infrastructure options like GPUs, securely within Snowflake. This service broadens access to third-party services like LLMs, Notebooks, MLOps tools, and more.

Why it matters: Allows you to host code written in any language inside Snowflake, while ensuring that the data used by that code never leaves the Snowflake cloud.

Large Language Models and Document AI

(Expected Release in Summer 2024): A new LLM developed by Snowflake, built from the acquisition of Applica’s generative AI technology, helps customers extract insights from unstructured data without needing machine learning expertise.

Why it matters: Easy access to document-based machine learning.

Matillion Updates

Data Productivity Cloud

Matillion released its Data Productivity Cloud product, which is a cloud-native version of its software.

Why it matters: In addition to enhanced functionality, moving to the SaaS version of Matillion removes the need for customers to host and manage their own instance, leaving more time to focus on business value.

LLM-enabled Data Pipelines

In Q1 2024, Matillion expects to launch an AI Prompt component, allowing users to augment their data pipelines with GenAI.

Why it matters: Companies with an existing Matillion implementation may find this feature an easy route to a proof of concept before investing heavily in a custom LLM integration.

PipelineOS

Announced in July 2023, PipelineOS is the key innovation in Matillion’s new Data Productivity Cloud. The stateless, microservice architecture allows for both horizontal and vertical scaling.

Why it matters: Understanding the concept of PipelineOS will better equip customers to transition to the SaaS platform.

Microsoft Updates

Power BI continues to redefine the landscape of data visualization with its new features, including Copilot and the DAX Query View. Additionally, Microsoft released new branding for all of its data-related tools: Microsoft Fabric.

Copilot in Power BI

Using Copilot, you can simply describe the visuals and insights you’re looking for, and Copilot will do the rest. Users can create and tailor reports in seconds, generate and edit DAX calculations, create narrative summaries, and ask questions about their data, all in conversational language.

Why it matters: Allows you to create dashboards using natural language, minimizing the need for an analytics engineer, especially for ad-hoc use cases.

DAX Query View

A new feature that provides powerful querying capabilities, enhancing data analysis and insights derivation.

Why it matters: Lets you write DAX queries inside Power BI without any external tools.

Microsoft Fabric

Announced in May 2023, Microsoft Fabric is the new branding for all data-related tools in the Microsoft ecosystem (e.g. Data Factory, Power BI, Synapse, etc.).

Why it matters: Currently, Snowflake and Databricks are considered to be the industry-leading data cloud platforms, and Fabric is Microsoft’s response to those tools.

Data Trends and Predictions for 2024

In the ever-evolving landscape of business intelligence, several key trends are reshaping how organizations perceive, manage, and leverage their data assets. From the imperative of AI-ready data to the paradigm shift of treating data as a product, these trends encapsulate transformative approaches driving the future of business analytics. As businesses head into 2024, understanding and adapting to these trends is paramount for sustained growth and innovation. Let’s delve into each trend, uncovering their significance and impact for modern data organizations.

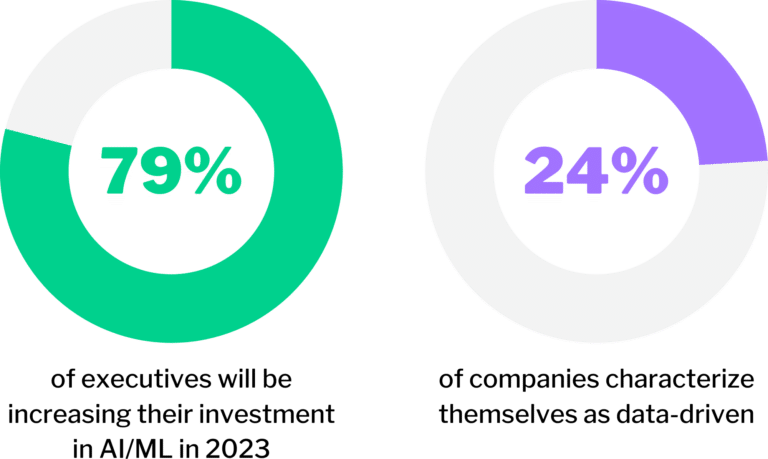

Trend 1: AI-ready data

If your data isn’t ready for AI, then your organization isn’t ready for AI. Before jumping on the AI bandwagon, companies need to take a step back and assess their data landscape. An AI algorithm’s effectiveness is tied to the quality of data it processes.

To help your organization navigate the complexities of preparing your data for AI, OneSix has built some free tools and resources:

Check out our comprehensive Roadmap to AI-ready Data to get familiar with the core steps

Take our 5-minute AI Readiness Assessment to evaluate your organization’s data maturity; this will be the basis for determining how to accelerate your journey to AI readiness

Trend 2: Unified business visibility

Trend 3: Data as a product

The evolving market trend emphasizes a transformative shift in how data is perceived: treating data as a product. This involves ensuring datasets are discoverable, addressable, self-describing, interoperable, trustworthy, and secure. The consumers of these datasets can then be other departments and organizations through data sharing technologies. This shift presents an opportunity for organizations to enrich their internal repositories by augmenting them with externally published datasets.

These external sources, such as economic indicators, population statistics, or weather data, offer a chance to enhance the depth and breadth of insights, empowering businesses with a more comprehensive and diverse informational landscape to drive informed decision-making and innovation.

Trend 4: Vector databases

Vector databases are rapidly emerging as a highly efficient storage solution tailored for the demands of AI, big data applications, and the long-term memory requirements of Large Language Model use cases. They excel at similarity-based searches, proving invaluable for critical functions like image recognition, recommendation systems, and intricate data comparisons.

What sets vector databases apart is their remarkable scalability and rapid data processing capabilities, making them ideal for real-time operations and large-scale applications. Their adaptability and speed render them not just as a viable option but as a crucial asset in the evolving landscape of data storage and utilization.
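The similarity search at the heart of a vector database can be illustrated with a small, brute-force sketch. The three-dimensional vectors and item names below are made up for illustration; real embeddings typically have hundreds of dimensions, and production vector databases rely on approximate nearest-neighbor indexes rather than scanning every vector as this example does.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k keys whose vectors are most similar to the query."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [key for _, key in scored[:k]]


# Toy "embeddings" invented for this example.
index = {
    "red sneakers": [0.9, 0.1, 0.0],
    "crimson running shoes": [0.8, 0.2, 0.1],
    "garden hose": [0.0, 0.1, 0.9],
}

if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0], index))
    # prints ['red sneakers', 'crimson running shoes']
```

The two footwear items rank first because their vectors point in nearly the same direction as the query, which is the property recommendation and image-matching systems exploit.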

Gear up for a transformative year ahead

As we head into 2024, the world of data and AI technology is poised for significant transformation. The trends witnessed in 2023 (AI-ready data, unified business visibility, cloud-first approaches, the data-as-a-product paradigm, and real-time analytics) carry profound implications for businesses across all industries. Understanding and acting on these trends is a must for any business aiming to stay ahead in this fast-moving world of data and AI.